Boost Your Website’s PageSpeed Performance With Caching Strategies

Implementing efficient caching strategies can boost the PageSpeed performance of your WordPress website by temporarily storing static resources in a cache, using mechanisms such as browser caching and CDN caching.

Additionally, database caching and in-memory caching can be added on the server side to improve a website’s PageSpeed performance.

Before diving into cache strategies, it’s essential to understand what caching is and its importance to your website’s performance. We will also explore different types of caching mechanisms and how RabbitLoader can help you with implementing these strategies without an expert or coding skills.

What Is Caching And How Does This Mechanism Work?

Caching is one of the essential optimization techniques that is used to store your website’s static content such as media files (images, videos, maps), CSS stylesheets, scripting files like JavaScript, and HTML files to improve the loading time of your website.

Let’s understand how it works. When a user visits a web page, the browser generally sends a request to the origin server. The server processes the browser’s HTTP request and provides a response to the user’s browser. This process can delay the loading speed, especially when the traffic is very high.

However, you can improve your web page’s loading time by adding an efficient caching mechanism. When you use a caching mechanism, the browser request goes to the cache instead of the origin server. Here, two cases can occur:

- Cache Hit

When the requested content is available on the edge server, it is retrieved successfully from the CDN cache. This is known as a cache hit. By calculating the cache hit ratio, you can determine a cache’s efficiency. For more details, read the cache hit ratio blog.

- Cache Miss

A cache miss happens when the requested content is not present on the edge server, so the CDN cache can’t fulfill the browser’s request and the request has to be forwarded to the origin server. A high number of cache misses indicates an inefficient cache.
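
To make the idea of cache efficiency concrete, here is a minimal sketch of how a cache hit ratio could be computed from hit and miss counters. The function name and the sample numbers are illustrative assumptions, not figures from a real site.

```python
def cache_hit_ratio(hits: int, misses: int) -> float:
    """Return the share of requests served from the cache (0.0 to 1.0)."""
    total = hits + misses
    if total == 0:
        # No requests yet, so there is nothing to measure.
        return 0.0
    return hits / total


# Hypothetical counters: 950 requests served from the cache, 50 sent to the origin.
ratio = cache_hit_ratio(hits=950, misses=50)
print(f"Cache hit ratio: {ratio:.1%}")  # prints "Cache hit ratio: 95.0%"
```

The closer the ratio is to 100%, the fewer requests have to travel to the origin server.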

Types Of Caching

Several types of caching mechanisms are available to enhance the page speed performance. Let’s explore them.

- Browser Caching

Browser caching is one of the most common web optimization techniques for temporarily storing a website’s static content in the browser cache when a visitor opens the website for the first time.

When the user re-opens the same webpage, the browser can render the cached content instead of downloading it again. By reducing the page’s loading time, the browser cache can improve the website’s PageSpeed performance.
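
Browsers decide how long to keep a resource mainly from the `Cache-Control` response header. The sketch below shows one possible way a server could choose that header per file type. The extension list, the one-year lifetime, and the function name are assumptions for illustration, and the long lifetime only makes sense if static file names change whenever their content changes.

```python
from pathlib import PurePosixPath

# Assumed lifetime in seconds (365 days); tune this for your own site.
ONE_YEAR = 31_536_000

# Assumed set of file types treated as static assets.
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".webp", ".woff2"}


def cache_control_for(path: str) -> str:
    """Pick a Cache-Control header value for the requested path."""
    suffix = PurePosixPath(path).suffix.lower()
    if suffix in STATIC_EXTENSIONS:
        # Static assets: let the browser reuse its copy for a long time.
        return f"public, max-age={ONE_YEAR}, immutable"
    # Everything else (for example HTML): the browser may store it,
    # but must revalidate with the server before reusing it.
    return "no-cache"


print(cache_control_for("/assets/app.css"))
print(cache_control_for("/index.html"))
```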

- CDN Caching

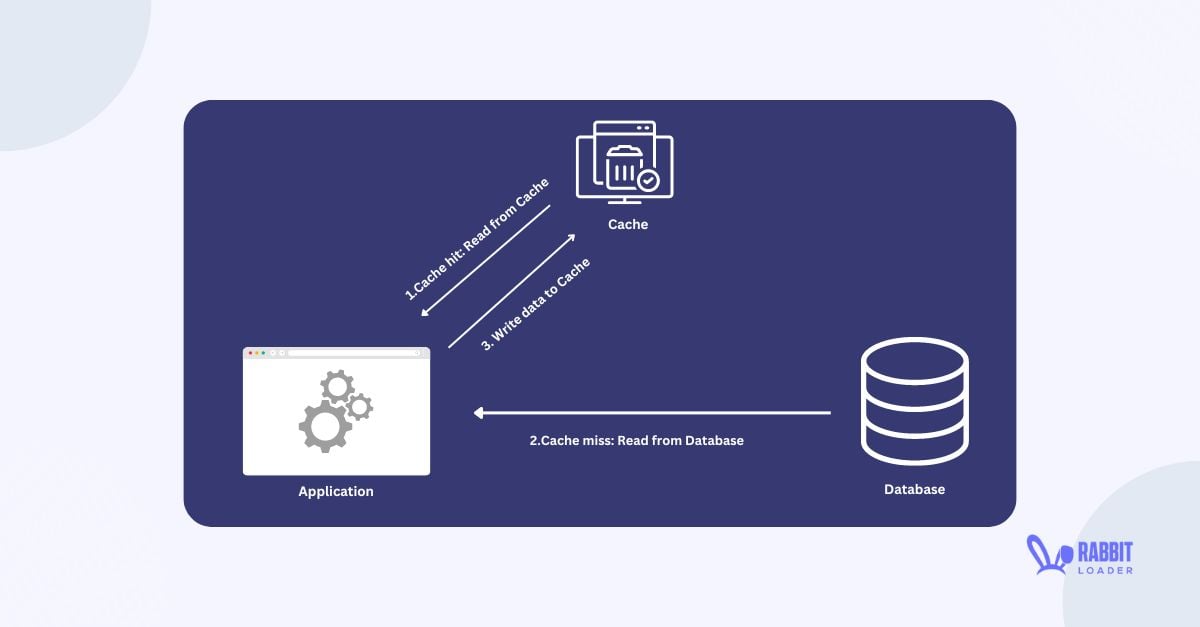

A large physical distance between the user and the origin server typically increases latency and, with it, the loading time. CDN caching is an effective way to reduce this latency.

A CDN cache distributes frequently accessed resources to its edge servers. So, when a browser requests some content, the request goes to a nearby edge server instead of the origin server, improving the loading time.

- In-Memory Caching

In-memory caching is used to minimize the number of database queries by storing frequently accessed data in the system’s RAM instead of fetching it from the database each time. In-memory caching is volatile: the stored data can be lost if the system is restarted or shut down.
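
As a rough illustration of in-memory caching, the sketch below keeps values in a plain Python dictionary in RAM. The class name and the time-to-live (TTL) expiry are assumptions added for the example; real sites usually rely on a dedicated cache service rather than hand-written code like this.

```python
import time
from typing import Any, Dict, Optional, Tuple


class InMemoryCache:
    """A tiny RAM-only cache whose entries expire after a fixed TTL."""

    def __init__(self, ttl_seconds: float) -> None:
        self._ttl = ttl_seconds
        # Maps key -> (expiry timestamp, cached value).
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def set(self, key: str, value: Any) -> None:
        self._entries[key] = (time.monotonic() + self._ttl, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is None:
            return None  # never cached
        expires_at, value = entry
        if time.monotonic() >= expires_at:
            del self._entries[key]  # expired, so drop it
            return None
        return value


cache = InMemoryCache(ttl_seconds=60)
cache.set("recent_posts", ["Post A", "Post B"])  # hypothetical query result
print(cache.get("recent_posts"))
```

Because the dictionary lives only in the process’s memory, everything in it disappears when the process stops, which is the volatility described above.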

Why Do We Need Caching?

Now, you might wonder why we need a caching mechanism. Here, we will clear up any doubts about the importance of implementing this mechanism on your website.

- Improve PageSpeed Performance

Serving data from an efficient cache can significantly reduce the initial server response time. This can improve key performance metrics, especially First Contentful Paint (FCP), Largest Contentful Paint (LCP), and Interaction to Next Paint (INP). As a result, the PageSpeed of the website improves.

- Boost User Experience

Users expect a fast-loading website, so a slow website can harm the user experience, especially for mobile users. According to the Thrive My Way website, a website takes 87% longer to load its content on mobile than on desktop.

So, PageSpeed optimization is even more essential for mobile users. By caching the static content, you can speed up your website. This improves the user experience, which in turn can improve the conversion rate as well as Google rankings.

What Are The Caching Strategies?

Caching strategies are methods for managing how content is stored in and retrieved from the cache. Which one to use depends on the website’s requirements. Here we will discuss the four most common caching strategies:

- Cache-aside

- Write-through

- Write-behind

- Read-through

Cache-Aside

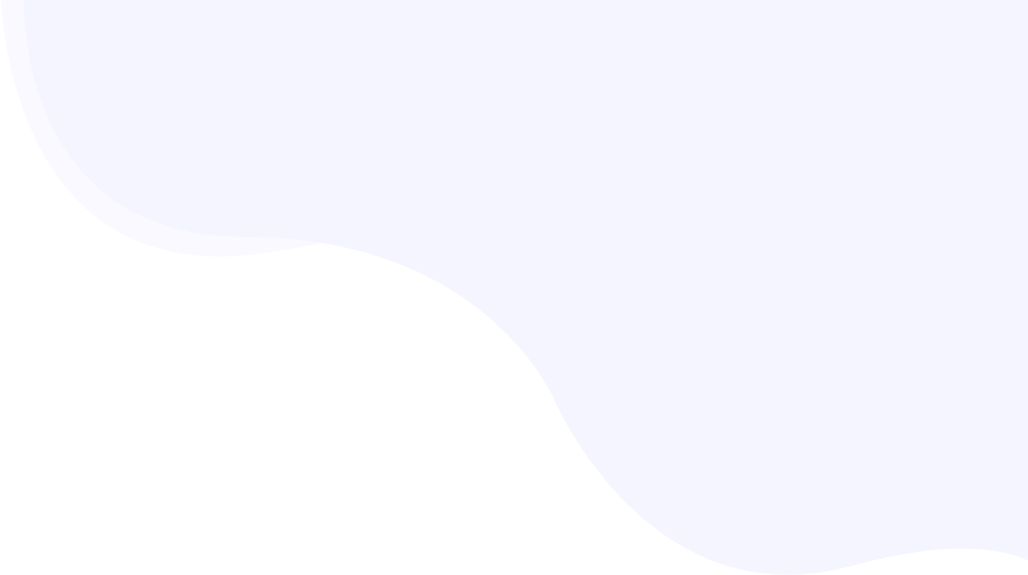

In the cache-aside strategy, the browser’s HTTP request first goes to the cache memory, and if the requested content is present, a cache hit occurs. However, when the requested data is not available in cache memory, the data is retrieved from the origin server and is usually then stored in the cache so that later requests can be served from it.

This strategy is flexible, but it requires proper management to ensure that the cached data stays up-to-date.
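
The cache-aside flow can be sketched in a few lines. This is a minimal illustration, assuming a plain dictionary as the cache and a hypothetical `load_from_origin` function standing in for the origin server.

```python
from typing import Callable, Dict


def get_with_cache_aside(
    key: str,
    cache: Dict[str, str],
    load_from_origin: Callable[[str], str],
) -> str:
    """The caller checks the cache first and fills it itself on a miss."""
    if key in cache:
        return cache[key]  # cache hit
    value = load_from_origin(key)  # cache miss: go to the origin
    cache[key] = value  # store it for the next request
    return value


def load_from_origin(key: str) -> str:
    # Hypothetical stand-in for a slow origin server or database call.
    return f"content for {key}"


page_cache: Dict[str, str] = {}
print(get_with_cache_aside("/home", page_cache, load_from_origin))  # miss, then stored
print(get_with_cache_aside("/home", page_cache, load_from_origin))  # hit
```

Note that the calling code is responsible for both the lookup and the refill, which is why stale entries have to be managed explicitly.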

Write-Through

In the write-through strategy, the data is written to the cache memory and the origin server at the same time. In simple words, when data is updated on a website, it is updated in both the cache memory and the origin server simultaneously.

The advantage of this write-through strategy is that it ensures data consistency, which means that the data always remains up-to-date in a cache as well as the original database.
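
A minimal sketch of write-through, assuming two dictionaries stand in for the cache and the original database (the class and attribute names are hypothetical):

```python
from typing import Dict, Optional


class WriteThroughStore:
    """Every write goes to the database and the cache in the same call."""

    def __init__(self) -> None:
        self.cache: Dict[str, str] = {}
        self.database: Dict[str, str] = {}  # stand-in for the real database

    def write(self, key: str, value: str) -> None:
        # Both copies are updated before the write is considered done,
        # so the cache never holds a different value than the database.
        self.database[key] = value
        self.cache[key] = value

    def read(self, key: str) -> Optional[str]:
        return self.cache.get(key)


store = WriteThroughStore()
store.write("post:1", "Hello")
store.write("post:1", "Hello, world")
print(store.read("post:1"), store.database["post:1"])
```

The price of this consistency is that every write waits for the slower of the two stores.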

Write-Behind

In the write-behind caching strategy, when data is updated, it is first written into the cache memory and only later written to the original database. The key advantage of this strategy is that it improves write performance by minimizing the write operations on the origin server.
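
The sketch below illustrates write-behind under simplifying assumptions: dictionaries stand in for the cache and the database, and a manual `flush` call stands in for whatever background job would persist the changes later. All names are hypothetical.

```python
from typing import Dict, Set


class WriteBehindStore:
    """Writes land in the cache first and reach the database on flush."""

    def __init__(self) -> None:
        self.cache: Dict[str, str] = {}
        self.database: Dict[str, str] = {}  # stand-in for the real database
        self._dirty: Set[str] = set()  # keys changed since the last flush

    def write(self, key: str, value: str) -> None:
        self.cache[key] = value  # fast: no database work here
        self._dirty.add(key)

    def flush(self) -> int:
        """Persist pending changes; return the number of database writes."""
        for key in self._dirty:
            self.database[key] = self.cache[key]
        written = len(self._dirty)
        self._dirty.clear()
        return written


store = WriteBehindStore()
store.write("views:home", "1")
store.write("views:home", "2")
store.write("views:home", "3")
print(store.flush())  # three cache writes become a single database write
```

The trade-off is that changes not yet flushed are lost if the cache fails first.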

Read-Through

In a read-through caching strategy, the cache acts as the primary data source. When the cache receives a data request, it first checks whether the data is present. If the requested data is unavailable in the cache memory, the cache itself retrieves it from the origin server and stores it in the cache memory before returning it.
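
A minimal read-through sketch, assuming the cache is handed a hypothetical `loader` function that knows how to fetch from the origin. Unlike cache-aside, the caller only ever talks to the cache.

```python
from typing import Callable, Dict


class ReadThroughCache:
    """The cache itself fetches missing entries from the origin."""

    def __init__(self, loader: Callable[[str], str]) -> None:
        self._loader = loader  # how to fetch from the origin on a miss
        self._entries: Dict[str, str] = {}

    def get(self, key: str) -> str:
        if key not in self._entries:
            # Miss: the cache loads and stores the value before returning it.
            self._entries[key] = self._loader(key)
        return self._entries[key]


def fetch_from_origin(key: str) -> str:
    # Hypothetical stand-in for a request to the origin server.
    return f"content for {key}"


cache = ReadThroughCache(loader=fetch_from_origin)
print(cache.get("/about"))  # loaded from the origin, then cached
print(cache.get("/about"))  # served from the cache
```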

Caching Strategies With RabbitLoader

Implementing the above caching strategies on your website requires extensive technical knowledge. When you use RabbitLoader for your website’s PageSpeed optimization, it takes care of your caching strategies.

By implementing an advanced browser caching mechanism, RabbitLoader stores all your static resources, such as HTML, stylesheets, scripting, and media files, in the browser cache. It allows you to set a maximum time for how long the content can be cached, helping to ensure that the cached content does not go stale.

Even when content is updated on your website, RabbitLoader will automatically update the content in the cache through its caching strategies.

RabbitLoader helps you reduce the distance between server and user by distributing the content across the 300+ edge servers available in its premium CDN, minimizing the latency.

Therefore, when you are looking for an effortless solution, RabbitLoader is the best option for implementing caching strategies on your website.